Hi all, after I enabled the TLS option, PMG only exposes port 25 to the public. How do I configure Thunderbird then? Do I just specify port 25 and no TLS?

↧

How to config Thunderbird client?

↧

Hetzner Proxmox + Additional IPs + Additional Subnet

Hello!

I have a Hetzner Proxmox server with the following config:

Code:

auto ens15
iface ens15 inet static
address 37.XX.XXX.43/32
gateway 37.XX.XXX.1
pointopoint 37.XX.XXX.1

# Bridge for single IP's (foreign and same subnet)
auto vmbr0
iface vmbr0 inet static
address 37.XX.XXX.43/32
bridge-ports none
bridge-stp off
bridge-fd 0
up ip route add 37.XX.XXX.39/32 dev vmbr0
up ip route add 37.XX.XXX.40/32 dev vmbr0
up ip route add 37.XX.XXX.41/32 dev vmbr0
up ip route add 37.XX.XXX.42/32 dev vmbr0

# Additional...

↧

↧

APT Status

↧

Controlling PCI Slot order in Proxmox 5.4

I'm trying to solve a very specific problem to get a Palo Alto VM-series firewall online on Proxmox (not supported by Palo Alto but should work fine in KVM). The VM itself works fine, but I'm trying to give it three specific network interfaces: One virtio interface for the dedicated management interface (net0, registered as eth0 in the VM), and then a PCI-Passthrough'd Intel 82576 PCI Express card with two NICs.

The issue is that the Palo Alto VM will always take the NIC with the lowest PCI-ID...

↧

[PVE7] - wipe disk doesn't work in GUI

Hi,

I tried to wipe an HDD that I previously used as a Ceph OSD drive and got this message:

disk/partition '/dev/sdb' has a holder (500)

I can run wipefs from the CLI, but it only works if I add the -a and -f flags.

Back in the web GUI, I tried again and got the same error.

fdisk -l /dev/sdb
Disk /dev/sdb: 200 GiB, 214748364800 bytes, 419430400 sectors
Disk model: VBOX HARDDISK (as you can see, I was testing in VirtualBox)
Units: sectors of 1 * 512 =...
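
One possible way to see what the "holder" in that message refers to: the kernel lists devices stacked on top of a disk under /sys/block/<disk>/holders. The sketch below only prints those entries. The function name `list_holders` and its optional sysfs-root argument are made up for illustration, and on a former Ceph OSD disk the holder is presumably a leftover LVM/device-mapper mapping, which is an assumption rather than something stated in the post.

```shell
#!/bin/sh
# list_holders DISK [SYSFS_ROOT]
# Prints the devices stacked on top of DISK (for example dm-0), one per line.
# SYSFS_ROOT defaults to /sys; it is only a parameter so that the sketch can
# be tried against a fake directory tree. (Hypothetical helper, not a PVE tool.)
list_holders() {
    disk="$1"
    sysfs="${2:-/sys}"
    dir="$sysfs/block/$disk/holders"
    if [ ! -d "$dir" ]; then
        echo "no such disk: $disk" >&2
        return 1
    fi
    # Each entry is named after the holding device.
    ls "$dir"
}

# Usage on the host from the post would be: list_holders sdb
```

If this prints something like dm-0, `dmsetup ls` or `lvs` on the host should show which mapping still sits on the disk.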

↧

How do I set up a password on an existing container?

Option 2 seems to be what I want:

https://pmsl.com.ng/ssh-into-a-proxmox-lxc-container/

Do I change 109 to my container ID?

Code:

lxc-attach --name 109

Do I have to add a username and password somewhere?
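
In case it helps, here is a small sketch of how this could look from the PVE host. It assumes the stock `pct` tool is available; the helper name `ct_passwd_cmd` is made up, and 109 is just the ID used in the linked article, so you would swap in your own container ID. The helper only prints the command; running the printed line on the host should prompt for a new root password inside the container.

```shell
#!/bin/sh
# ct_passwd_cmd CTID
# Prints (does not run) the host-side command that starts passwd for root
# inside container CTID. Hypothetical helper, for illustration only.
ct_passwd_cmd() {
    ctid="$1"
    # Reject empty or non-numeric IDs.
    case "$ctid" in
        ''|*[!0-9]*)
            echo "container ID must be numeric: $ctid" >&2
            return 1
            ;;
    esac
    echo "pct exec $ctid -- passwd root"
}

# 109 is the example ID from the post; replace it with your container ID.
ct_passwd_cmd 109
```

Alternatively, `pct enter 109` opens a shell in the container, where `passwd` can be run directly.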

↧

Ceph HDD OSDs start at ~10% usage, 10% lower size

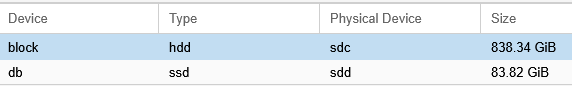

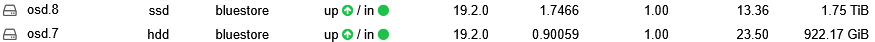

I've noticed that when I create an OSD on an HDD, it starts out showing about 10% usage and stays about 10% higher. We are using an SSD for the DB (and therefore the WAL), but it seems like that shouldn't be counted...? Also, the size shown in the OSD "details" is lower than on the OSD page in PVE.

Screen cap of osd.7 details:

[Screenshot: 1744300217829.png]

In PVE:

[Screenshot: 1744300320835.png]

All the SSD-only OSDs start at 0%. In this cluster all the SSDs are ~13% used and the few HDDs are all ~23% used.

(and yes we're aware of the bug...

↧

Adding a Second Public Network to Proxmox VE with Ceph Cluster

Hello Proxmox Community,

I am currently running a Proxmox VE cluster with Ceph storage, and I would like to add a second public network to my configuration. Here is my current setup:

network interface:

Bash:

root@s1proxmox01:~# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
inet 127.0.0.1/8 scope host lo
valid_lft forever preferred_lft forever
inet6 ::1/128 scope host...

↧

iGPU Passthrough

Hello, can someone please help me with passthrough of the integrated Intel Iris Xe GPU on the Intel i9-12900H in my mini PC? Or can someone link me to a decent guide I can follow? I know there's this guide: https://www.derekseaman.com/2024/07...u-vt-d-passthrough-with-intel-alder-lake.html

But I was wondering if there's another way, as I read that this breaks when you update Proxmox. Can someone please give me a hand? Thanks

↧

proxmox-secure-boot-support removed??

↧

Allow migration and replication of disks on ZFS encrypted storage

Hi,

I came across the problem that migration and replication do not work if the disk(s) are on an encrypted ZFS storage, which is not well documented, by the way.

The problem arises because the ZFS export function in pve-storage uses the -R option of zfs send, and this option does not work with encrypted datasets unless you use -w as well. Since that is not an option, because we cannot transfer the data encrypted (the target pool has a different encryption key, etc.), the question is why use...
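
To make the flag combinations concrete, here is a rough sketch that only prints the corresponding `zfs send` command lines and runs nothing. The helper name `zfs_send_cmd`, the mode names, and the snapshot name are all made up for illustration. The plain variant without -R is included only for comparison and is not taken from pve-storage.

```shell
#!/bin/sh
# zfs_send_cmd MODE SNAPSHOT
# Prints (does not run) a zfs send command line for SNAPSHOT.
#   replicate      -R only: refused for encrypted datasets, which need -w too
#   raw-replicate  -w -R: accepted, but the stream stays encrypted with the
#                  source key
#   plain          no -R, no -w: data is sent decrypted, so the key must be
#                  loaded on the source
# Hypothetical helper, for illustration only.
zfs_send_cmd() {
    mode="$1"
    snap="$2"
    case "$mode" in
        replicate)     echo "zfs send -R $snap" ;;
        raw-replicate) echo "zfs send -w -R $snap" ;;
        plain)         echo "zfs send $snap" ;;
        *)
            echo "unknown mode: $mode" >&2
            return 1
            ;;
    esac
}

# The snapshot name below is a placeholder, not taken from the post.
zfs_send_cmd raw-replicate rpool/data/vm-100-disk-0@migrate
```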

↧

[TUTORIAL] Encrypted ZFS Root on Proxmox

The Proxmox installer does not provide the ability to set up an encrypted root with ZFS, so Debian with an encrypted ZFS root needs to be installed first, then Proxmox will be added on top. This guide covers some of the caveats of doing so.

First, follow the excellent guide written here by the authors of ZFS to install Debian: https://openzfs.github.io/openzfs-docs/Getting%20Started/Debian/Debian%20Bookworm%20Root%20on%20ZFS.html

Disk layout for reference, they are created according to the ZFS...

↧

Proxmox Datacenter Manager - First Alpha Release

We’re excited to announce the alpha preview of Proxmox Datacenter Manager! This is an early-stage version of our software, giving you a first impression of what we’ve been working on and a chance to collaborate.

What's Proxmox Datacenter Manager?

The Datacenter Manager project has been developed with the objective of providing a centralized overview of all your individual nodes and clusters. It also enables basic management like migrations of virtual guests without any cluster...

↧

Native full-disk encryption with ZFS

Hello there!

I really like the idea of having the full root-filesystem encrypted using native ZFS encryption (only).

However, as I couldn't find any complete writeup on this topic, here's what worked for me (with and without Secure Boot on PVE 8.1):

1. Install with ZFS (RAID0 for a single-disk setup)

2. Reboot into ISO > Advanced Options > Graphical, debug mode

3. Exit to bash with `exit` or Ctrl+D

4. Execute the following commands:

Bash:

# Encrypt root dataset
zpool import -f...

↧

Removing an existing hard disk and replacing it with new disks

Hello,

On my Proxmox server (current version) I need to remove a defective hard disk (/dev/sdb or /dev/sde) as well as another disk that is too small, and replace them with new disks.

Important: I don't want to touch the existing disk (/dev/sda); Proxmox runs on it, and my templates, images, etc. are stored there as well.

/dev/sdv or /dev/sde is defective but belongs to an LVM.

I'm a bit at a loss right now as to how I can do this, so to speak, as "open-heart surgery"...

↧

VM crash after update

Hi everyone, I'm in trouble. I updated from pve-manager/8.3.5/dac3aa88bac3f300 to pve-manager/8.4.1/2a5fa54a8503f96d and then rebooted as requested. The VMs migrated, but when I return them to the updated host they don't start, and if I try to restart them they still don't start. What could have happened? Please help me, I'm ruined.

↧

Interesting project for PBS: PBS-Plus

Hello everyone,

I wanted to draw your attention to an interesting open-source project that could be useful for many Proxmox Backup Server (PBS) users: PBS-Plus.

The project extends PBS with additional features and looks very promising overall. At the moment it is developed by a single person, which could be a long-term risk, especially if something changes in the internal logic of PBS in the future...

↧

Node restart and automatic migration of VMs (HA)

Hi Folks,

If for any reason a node performs a (controlled) restart, none of the running VMs are migrated to the best available free node in the cluster, even if the VM is in an HA group!

I think this is not the behavior intended by HA.

Am I wrong, or have I missed some vital information?

Regards

Gerhard

↧

"convert to template" generates a warning -- why?

Here is the output:

Code:

Renamed "vm-9001-disk-0" to "base-9001-disk-0" in volume group "pve"
Logical volume pve/base-9001-disk-0 changed.
WARNING: Combining activation change with other commands is not advised.
TASK OK

It was just one command to create the template:

Code:

qm template 9001

... why is it complaining that commands were combined?

↧

LXC can't snapshot or backup to PBS or NFS after adding bind mounts

First time using LXC (normally a VM guy), so this is a new issue for me. I spun up an Ubuntu/Emby container. Woohoo, that worked. Took a snapshot and then stopped for the night. Overnight my backup schedule ran and backed up the LXC without issue.

Next day I set up bind mounts of CIFS shares for my media as described in this post. That worked, woohoo again. Got all my media added to Emby, most of the configuration done, and tested hardware transcoding to make sure the host GPU is...

↧